IT system integration is the architectural discipline of connecting disparate software applications, data sources, and services to operate as a single, cohesive system. It transforms a collection of isolated digital tools into a unified, functional whole. Without a deliberate integration strategy, businesses are left wrestling with data silos, inefficient manual workflows, and brittle, unmaintainable software.

This guide provides a pragmatic overview for founders, CTOs, and technical leaders on how to approach IT system integration not as a technical chore, but as a core component of a resilient and scalable digital architecture.

The Problem: Fragmented Systems as a Business Bottleneck

In any modern enterprise, software does not operate in a vacuum. The CRM must exchange data with the marketing automation platform. The billing system must synchronize with the ERP. Analytics platforms must pull data from dozens of sources to generate meaningful insights.

IT system integration is the engineering practice that makes these connections possible, reliable, and secure.

Viewing integration as a series of ad-hoc technical tasks is a common and costly mistake. A fragmented digital ecosystem creates operational friction that directly impacts the bottom line. When systems cannot communicate programmatically, staff are forced into manual data entry, which introduces errors, reduces productivity, and leads to decisions based on stale or incomplete information.

The Solution: Integration as a Strategic Architectural Layer

A strategic approach to integration creates a unified operational fabric. The benefits are tangible and directly support core business objectives:

- Improved Operational Efficiency: Automating data flows between applications eliminates redundant manual work, freeing up teams to focus on value-creating activities.

- Enhanced Data Integrity: Synchronizing data across systems establishes a single source of truth, ensuring that business decisions are based on accurate, consistent information.

- Increased Architectural Agility: A well-designed integration layer allows for the addition or replacement of applications with minimal disruption, providing the flexibility to adapt to market changes.

- Cohesive Customer Experience: Connecting sales, support, and product systems provides a 360-degree view of the customer, enabling personalized and consistent interactions across all touchpoints.

A naive approach—simply patching systems together as needed—inevitably leads to a brittle and unmanageable architecture. True value comes from treating integration as a deliberate, foundational component of your digital strategy.

Market trends underscore the criticality of this discipline. The global system integration market is projected to more than double from $360.705 billion in 2019 to $708.747 billion by 2026, a surge driven by cloud adoption and IoT. This growth highlights a simple reality for technical leaders: effective integration is the backbone of a resilient, competitive business. You can find more insights in the full market research on Business Wire.

Choosing an Integration Architecture: A Blueprint for Scalability

Selecting an integration architecture is analogous to choosing the blueprint for a building. The upfront decision dictates its structural integrity, future extensibility, and long-term maintenance costs. A shortsighted choice results in a brittle system that is difficult and expensive to modify. The right choice creates a foundation for genuine business agility.

Every pattern for IT system integration involves trade-offs in complexity, cost, and flexibility. Understanding these trade-offs is a critical responsibility for any leader building a connected digital ecosystem.

This diagram illustrates a key principle: integration is not a peripheral task but the central hub enabling core business outcomes like efficiency, data consistency, and growth.

The Risk of Point-to-Point Connections

The most basic integration approach is the point-to-point connection, where two systems are directly linked. For a simple, isolated task like connecting a CRM to a single marketing tool, this method is fast and straightforward.

However, this simplicity is a trap. As the number of systems grows, the number of point-to-point connections explodes quadratically. Connecting just five systems requires ten separate, custom-built links. This inevitably leads to “spaghetti architecture”—a tangled, undocumented web of dependencies that is nearly impossible to debug, modify, or secure. Each new system added makes the entire structure exponentially more fragile.

A system built on point-to-point connections is a ticking time bomb of technical debt. It sacrifices long-term maintainability for short-term convenience, a trade-off that rarely pays off.

The Trade-offs of Centralized Hub-and-Spoke Models

To address the chaos of point-to-point connections, the hub-and-spoke model emerged, often implemented with an Enterprise Service Bus (ESB). In this pattern, all communication is routed through a central hub that handles data transformation, routing, and protocol translation.

This approach imposes order. Instead of connecting every system to every other one, each application requires only a single connection to the central hub. This simplifies management and provides a single point for monitoring and control. However, this centralization introduces its own set of problems:

- Single Point of Failure: If the central hub fails, every integrated process across the organization grinds to a halt.

- Performance Bottleneck: As data volumes and the number of connected systems increase, the ESB can become a chokepoint, degrading performance across the entire architecture.

- Vendor Lock-in and Complexity: ESB solutions are often proprietary, monolithic, and expensive, requiring specialized skills for maintenance and operation.

While the hub-and-spoke model offers discipline, it often creates a monolithic bottleneck that stifles agility.

Modern Decoupling with API-Led Connectivity

A more modern and flexible approach is API-led connectivity. This strategy organizes integrations into three distinct layers of APIs, treating each integration as a managed, reusable product:

- System APIs: These act as gatekeepers, providing secure and consistent access to core backend systems (e.g., databases, ERPs, legacy platforms). They unlock data without exposing underlying implementation complexity.

- Process APIs: These are the orchestrators. They compose data from multiple System APIs to execute a specific business process, such as “process customer order” or “onboard new employee.”

- Experience APIs: These are the consumer-facing layer. They package data from Process APIs and format it for a specific end-user channel, whether a mobile app, a web portal, or a partner’s system.

This layered design promotes reuse and decouples systems. If a CRM is replaced, only its corresponding System API needs to be updated. The Process and Experience APIs that consume customer data remain untouched, dramatically reducing the cost and risk of change. This architectural style is a cornerstone of modern approaches like Service-Oriented Architecture (SOA), which emphasizes building systems from loosely coupled, independent services.

Comparison of Common IT Integration Patterns

The optimal pattern depends on your specific context, including scale, team capabilities, and long-term business goals. There is no single “best” answer, only the most appropriate fit for the problem at hand.

| Integration Pattern | Best For | Key Advantage | Primary Risk |

|---|---|---|---|

| Point-to-Point | Simple, two-system integrations with a limited lifespan. | Speed. It’s the fastest way to connect A to B for a one-off need. | Unmanageable Complexity. Creates a brittle “spaghetti architecture” that is impossible to maintain at scale. |

| Hub-and-Spoke (ESB) | Centralising control in large enterprises with many legacy systems. | Centralised Governance. A single point for control, monitoring, and applying policies. | Monolithic Bottleneck. The central hub becomes a single point of failure and can hinder performance and agility. |

| API-Led Connectivity | Building a scalable, agile, and reusable integration fabric. | Decoupling & Reusability. Promotes agility by isolating systems and treating integrations as reusable products. | Higher Upfront Investment. Requires a strategic commitment to API design, management, and governance. |

The ultimate goal is to build a system that can evolve with the business, not constrain it. These patterns offer different paths to achieving that goal, each with a unique balance of short-term velocity and long-term resilience.

Navigating Critical Integration Risks from an Engineering Perspective

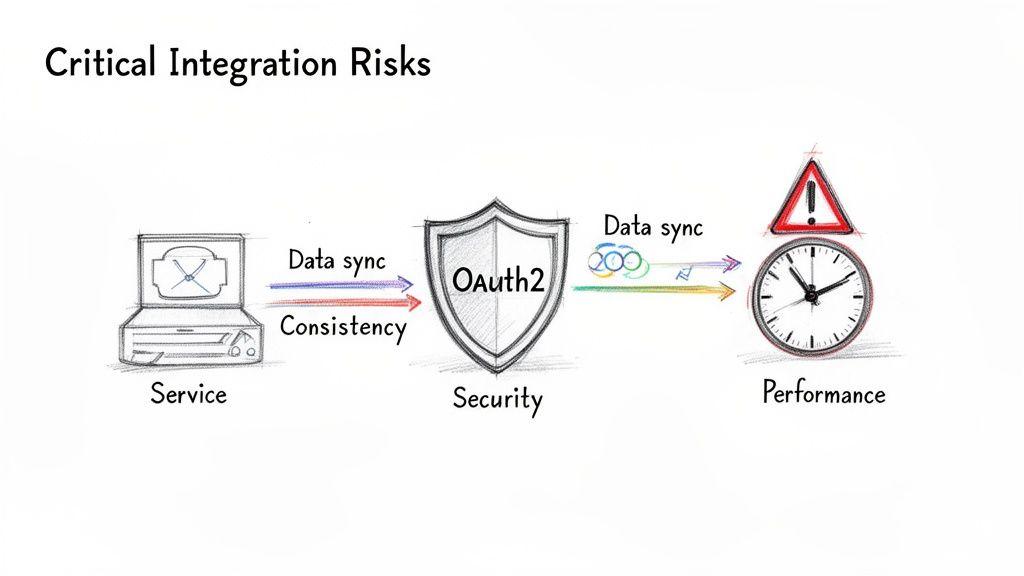

Connecting systems introduces new and complex risks. A superficial approach to IT system integration can create catastrophic security vulnerabilities, data integrity failures, and performance bottlenecks that cripple operations. For organizations subject to compliance frameworks like GDPR, NIS2, and DORA, a poorly designed integration is not just a technical failure—it is a significant business and legal liability.

The Expanded Security Attack Surface

Every connection between systems creates a new potential entry point for attackers. APIs, as the primary mechanism for modern integration, are prime targets if not properly secured. A poorly implemented API can expose sensitive data or allow unauthorized actions, turning a functional connection into a major security vulnerability.

Security must be an architectural consideration from the outset, not an afterthought. This means implementing robust, standard protocols for access control.

- Authentication: Verifies the identity of the requesting entity. This is typically handled with API keys for simple use cases or, more securely, with protocols like OAuth 2.0, which allows for delegated access without sharing raw credentials.

- Authorization: Confirms that an authenticated entity has the necessary permissions to perform the requested action. This involves enforcing the principle of least privilege, ensuring a service can only access the specific data and operations it absolutely requires.

Ignoring these foundational security measures is architecturally negligent. It is not a question of if a breach will happen, but when.

The Challenge of Data Consistency in Distributed Systems

When the same data entity exists in multiple systems, maintaining synchronization and accuracy is a significant challenge. For example, if a customer updates their address in a support portal but the change does not propagate to the billing system, the result is failed payments and operational disruption. This struggle to maintain a single source of truth is a core problem in integration.

In distributed systems, achieving strong, instantaneous consistency is often impractical or impossible. Therefore, architects frequently design for eventual consistency. This model acknowledges a brief, temporary delay before all systems are synchronized. The architectural challenge is to design the system to function correctly during this transient inconsistency and to implement robust data synchronization mechanisms (e.g., message queues, event sourcing) to minimize the reconciliation window. A solid strategy for disaster recovery in the cloud is also crucial for data resilience.

Performance Bottlenecks and Cascading Failures

A poorly designed integration can degrade the performance of the entire technology stack. If one service in an integrated chain experiences high latency, it creates a ripple effect, slowing down every dependent application. A single slow API can degrade the user experience across an entire product.

A more severe risk is cascading failures, where the failure of one component triggers a domino effect that takes down dependent systems. For example, the failure of a centralized authentication service could lock users out of every application that relies on it.

Mitigating these performance and reliability risks requires deliberate architectural patterns:

- Timeouts: Ensure that a call to a slow or unresponsive service does not block the requesting application indefinitely.

- Circuit Breakers: Automatically halt requests to a failing service for a defined period, allowing it to recover and preventing the failure from propagating.

- Asynchronous Communication: Utilize tools like message queues to decouple systems, allowing them to communicate without requiring an immediate response. This makes the overall architecture more resilient and responsive.

An Agile Roadmap for Implementation

Successful IT system integration projects are rarely delivered using a rigid, “big bang” waterfall methodology. The inherent complexity and risk are too high. A pragmatic, agile roadmap that prioritizes incremental delivery, continuous feedback, and risk reduction is a more effective approach. This framework guides a complex technical project from initial concept to a reliable, maintainable system.

Phase 1: Discovery and Architectural Design

This initial phase is dedicated to deep analysis. Before writing any code, it is essential to understand the systems involved, the data that must flow between them, and the business logic governing those flows. Building without this clarity is a recipe for failure. The outputs of this discovery directly inform the architectural design, where foundational decisions are made about integration patterns, technology stacks, and security implementation.

Key activities include:

- System Mapping: Documenting each system to be integrated, including its APIs, data models, and communication protocols.

- Data Flow Diagramming: Creating clear visual representations of how data will move, where transformations occur, and what triggers each process.

- Security Threat Modeling: Proactively identifying potential vulnerabilities in the proposed architecture and defining mitigation strategies, such as the enforcement of OAuth 2.0.

- Pattern Selection: Choosing the appropriate architectural blueprint (e.g., API-led, event-driven) based on requirements for scalability, latency, and resilience.

Phase 2: Incremental Delivery and Comprehensive Testing

With a solid architectural plan, the focus shifts to execution. Rather than attempting to build the entire solution at once, the project is delivered in small, iterative sprints. Each sprint focuses on developing and deploying a discrete piece of functionality, such as synchronizing customer data between two systems.

This iterative cycle provides rapid feedback, allowing the team to adapt and refine the solution as they learn more. This approach is best supported by strong automation, as detailed in our guide to building a modern CI/CD pipeline.

Each increment must be validated by a rigorous testing strategy:

- Unit and Integration Tests: Verify that individual components and their direct connections function as expected.

- End-to-End (E2E) Testing: Simulate real-world user workflows to ensure the entire integrated process operates correctly.

- Performance and Load Testing: Stress the system to identify and resolve bottlenecks before they impact users in production.

A common failure pattern is deferring comprehensive testing until the end of the project. This is a recipe for disaster, as it ensures that deep-seated architectural flaws are only discovered when they are most expensive and difficult to fix.

Phase 3: Ongoing Monitoring and Maintenance

An integration is not “done” at deployment. That is the beginning of its operational life. The real work is establishing robust monitoring and maintenance routines to ensure the system remains healthy, performant, and secure over the long term. This practice is known as observability and is built on three pillars:

- Logging: Capturing detailed, structured logs from every component to trace events and diagnose errors.

- Metrics: Collecting key performance indicators (e.g., API response times, error rates, queue depths) to provide a real-time view of system health.

- Tracing: Using distributed tracing to follow a single request as it propagates through multiple services, making it possible to pinpoint the exact source of latency or failure.

This continuous oversight enables a shift from a reactive “break-fix” model to a proactive one, where potential issues are identified and addressed before they escalate into major problems.

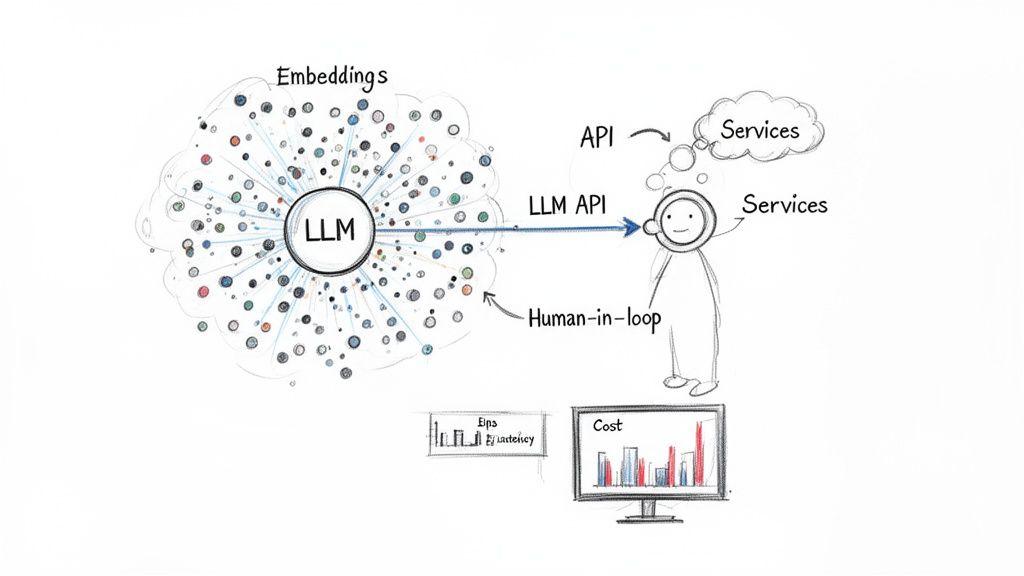

Integrating AI Systems: The New Architectural Frontier

Integrating AI, particularly Large Language Models (LLMs), introduces a layer of complexity not addressed by traditional IT system integration playbooks. While connecting to an LLM via an API is straightforward, the primary engineering challenge lies in building the surrounding architecture required to make these systems reliable, secure, and cost-effective. This involves orchestrating systems that are inherently non-deterministic.

Orchestrating Agentic Workflows

Many advanced AI applications rely on agentic workflows, where an AI model uses external tools (e.g., querying a database, calling another API) to complete a complex task. This requires a sophisticated orchestration layer to manage multi-step processes, handle tool-calling logic, and recover from failures. Unlike a standard microservice with predictable responses, an LLM-powered agent can produce unexpected outputs or fail in novel ways. A simple integration that merely forwards requests to a model is brittle by design.

The Necessity for Robust Guardrails

Because LLM outputs are probabilistic and generative, they are inherently unpredictable. Guardrails are essential safety mechanisms that govern model behavior. These are critical integration components, not optional features.

Guardrails are designed to:

- Prevent Harmful Content: Block inappropriate, biased, or unsafe responses.

- Enforce Factual Accuracy: Validate outputs against trusted data sources to mitigate “hallucinations.”

- Maintain Brand Voice: Ensure the model’s tone and style align with company guidelines.

- Protect Sensitive Data: Prevent the model from leaking personally identifiable information (PII) or other private data.

An AI integration without guardrails is an unmanaged liability. It exposes the business to the full spectrum of the model’s potential failures without any architectural safety net.

This need for secure, advanced infrastructure is reflected in market data. The global system integration market is projected to reach $1,289.22 billion by 2032, driven largely by the need to unify IT systems for improved agility and security—a trend that directly supports the integration of complex AI systems. You can explore more detailed market insights from SkyQuest.

AI Observability and Human Oversight

AI systems require a specialized form of monitoring known as AI observability. This extends beyond standard performance metrics to track factors unique to generative models, which is essential for maintaining both reliability and cost-effectiveness.

Key metrics to track include:

- Cost Management: Monitor token usage per API call to prevent budget overruns.

- Performance Monitoring: Measure latency and implement fallback strategies for when a primary model is slow or unavailable.

- Reliability Engineering: Use caching to reduce costs and rate limiting to manage API quotas.

Finally, for high-stakes decisions, automation must be balanced with human judgment. Implementing human-in-the-loop (HITL) controls is a critical integration pattern. HITL ensures that for crucial tasks—such as authorizing a large financial transaction or approving a medical diagnosis—a human expert validates the AI’s recommendation before it is executed. This pragmatic approach blends the speed of automation with the accountability of human oversight.

Conclusion: Key Takeaways for Technical Leaders

Turning integration theory into a reliable, maintainable system that drives business value is a significant engineering challenge. Success is not achieved through a single, large-scale launch but through a deliberate, iterative process guided by sound architectural principles.

Core Principles for a Sound Integration Strategy

A robust integration strategy is founded on four key principles that help avoid the common pitfalls of brittle systems and escalating maintenance costs.

- Start with the Business Problem: Integration must serve a clear purpose, such as automating a critical workflow or creating a unified customer view. Avoid a technology-first approach where the tool is selected before the problem is fully defined.

- Architect for Maintainability: The fastest solution today often creates the largest technical debt tomorrow. Prioritize integration patterns like API-led connectivity that promote decoupling and reusability, even if they require more upfront investment.

- Embed Security and Privacy by Design: Security is not a feature to be added later. It must be a foundational part of the architecture, encompassing authentication, authorization, and data protection to comply with frameworks like GDPR and prevent costly breaches.

- Deliver Incrementally: Build, test, and deploy integrations in small, manageable increments. This agile methodology reduces risk, accelerates feedback loops, and allows the architecture to evolve without requiring complete rebuilds.

The system integrator market is forecast to grow from $595 billion in 2026 to $1,900 billion by 2033. This growth reflects the immense demand for partners who can build robust, maintainable solutions, particularly in complex domains like AI. You can explore more on the system integrator market growth here.

For founders and CTOs, the path forward is clear: treat integration as a core architectural competency. Before starting a new project, conduct a thorough discovery phase to map data flows and define business objectives. This disciplined approach is the foundation for building a connected digital ecosystem that provides lasting value.

Frequently Asked Questions About System Integration

How do you calculate the ROI of an integration project?

Calculating the Return on Investment for an integration project requires quantifying business outcomes beyond initial development costs. A proper ROI analysis should include:

- Labor Cost Savings: Measure the hours reclaimed by automating manual data entry and workflows, multiplied by the cost of that labor.

- Error Reduction Savings: Quantify the financial impact of errors caused by out-of-sync data, such as incorrect invoices, shipping mistakes, or compliance penalties.

- Process Velocity Gains: Measure the reduction in cycle time for core business processes (e.g., order-to-cash). Faster cycles often translate to improved cash flow and customer satisfaction.

The true ROI is a combination of these quantitative savings and the strategic value of increased agility and data reliability.

What is the difference between an API and an integration?

An API (Application Programming Interface) is a tool; an integration is the functional system built using that tool.

Think of an API as a standardized contract that defines how one piece of software can request services or data from another. It is the set of rules for communication.

An integration is the complete, end-to-end process that uses one or more APIs to connect systems and automate a workflow. For example, a single “new customer onboarding” integration might use APIs from a CRM, a billing platform, and an email marketing tool to create a seamless automated process.

Should I build a custom integration or use an iPaaS solution?

The choice between a custom solution and an Integration Platform as a Service (iPaaS) depends on your specific requirements, team capabilities, and long-term strategy.

- Build Custom: This approach is suitable for highly specialized workflows, complex data transformations, or when absolute control over performance, security, and the data path is required. It offers maximum flexibility but demands significant engineering resources for development and ongoing maintenance.

- Use iPaaS: An iPaaS solution (e.g., MuleSoft, Zapier) excels at connecting common SaaS platforms with pre-built connectors and visual workflow builders. It accelerates development for standard use cases but can introduce limitations in flexibility, control, and cost at scale.

At Devisia, we specialize in engineering robust, maintainable custom integrations that align with your core business logic and architectural principles. We believe that critical integrations should be treated as first-class products, designed for resilience and long-term value.

If you are ready to move from fragmented systems to a unified digital architecture, let’s discuss your requirements. Learn more about our approach at https://www.devisia.pro.