The choice between cloud computing and on-premise infrastructure pivots on a single, critical question: do you own and manage physical hardware, or do you consume computing as a service? The answer dictates where your engineering team invests its time—maintaining servers or building products. For founders, CTOs, and compliance managers, this is not merely a technical decision; it’s a strategic one with long-term implications for cost, security, and agility.

The Fundamental Choice: Infrastructure Ownership vs. Service Consumption

For decades, deploying software followed a rigid playbook: purchase servers, networking gear, and storage, then house them in a data centre or an on-premise server room. This is the classic on-premise model, defined by significant upfront Capital Expenditure (CapEx). The business bears the entire cost of procuring and maintaining physical hardware before a single line of code is deployed.

The primary problem with this ownership model is its inherent rigidity. It forces engineering teams to predict capacity needs years in advance—a nearly impossible task for a growing business or a product with uncertain demand. Over-provisioning wastes capital on idle hardware. Under-provisioning creates performance bottlenecks that directly impact users and revenue.

Furthermore, a dedicated IT team is consumed by operational burdens:

- Hardware procurement, installation, and replacement cycles.

- Operating system patching and software updates.

- Managing power, cooling, and physical data centre security.

- Designing, implementing, and testing disaster recovery plans.

This maintenance cycle consumes valuable engineering resources that could otherwise be allocated to innovation and product development.

The Shift to Service Consumption

Cloud computing inverts this model. Instead of owning hardware, you consume computing resources as a utility from providers like AWS, Azure, or Google Cloud. This immediately shifts the financial model from CapEx to Operational Expenditure (OpEx), creating a pay-as-you-go structure that aligns costs directly with actual usage.

This strategic pivot from infrastructure ownership to service consumption is the central trade-off. It exchanges absolute control over the physical stack for operational agility and accelerated development cycles.

This service-oriented model is typically offered in three layers, each abstracting away different levels of management responsibility.

| Service Model | Description | Managed By Provider | Managed By You |

|---|---|---|---|

| IaaS (Infrastructure as a Service) | Foundational building blocks: virtual servers, storage, networking. | Physical data centre, servers, networking, and storage virtualisation. | Operating system, middleware, applications, and data. |

| PaaS (Platform as a Service) | A managed platform for building and deploying applications without managing the underlying infrastructure. | Everything in IaaS, plus the OS, middleware, and runtime. | Applications and data. |

| SaaS (Software as a Service) | Ready-to-use software delivered over the internet, typically via subscription. | The entire stack, including the application itself. | User access and data configuration within the application. |

Comparing Cloud and On-Premise Across Critical Pillars

The decision between cloud and on-premise is not about finding a universally “best” solution. It is a strategic exercise in evaluating trade-offs. For technical leadership, the choice hinges on a pragmatic comparison across the dimensions that directly impact operations, budget, and risk profile: Total Cost of Ownership (TCO), security, performance, scalability, and maintenance.

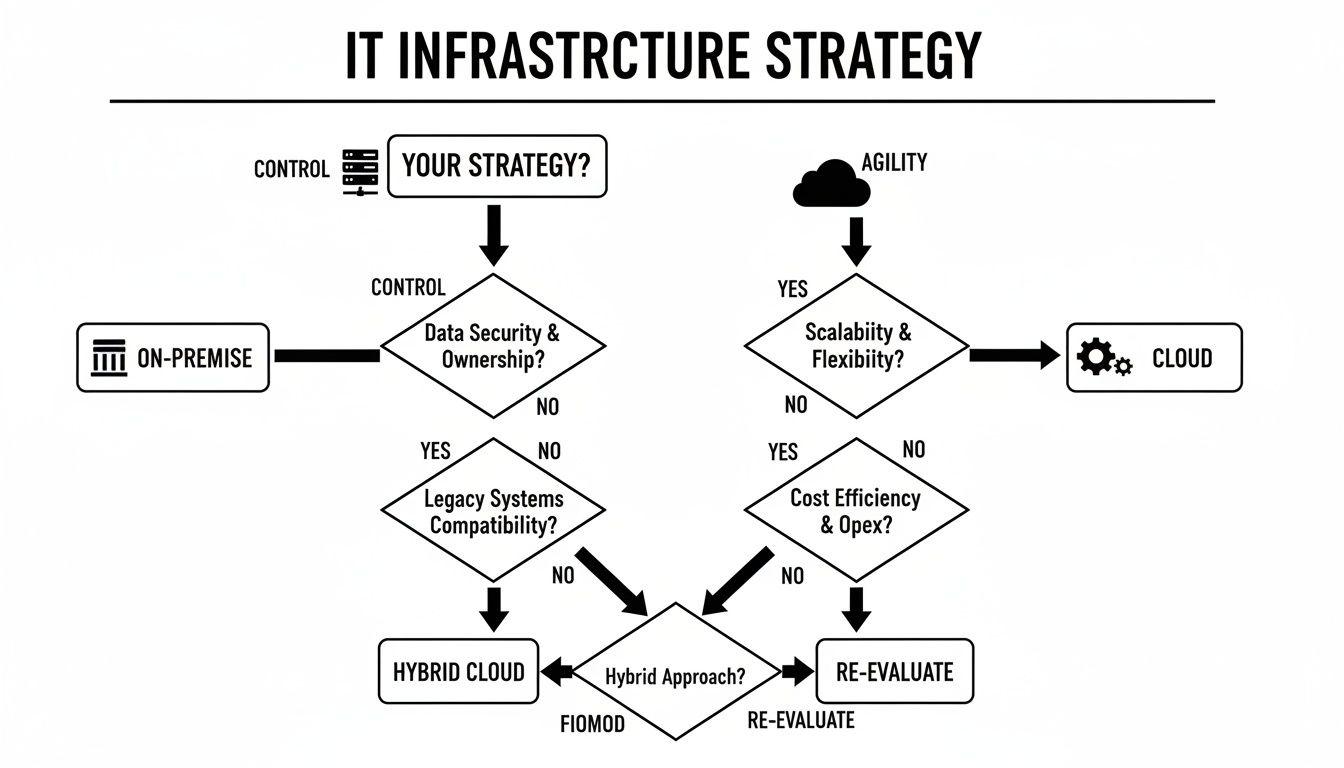

A balanced evaluation acknowledges that neither model is inherently superior. The optimal choice is contextual, driven by specific business requirements, regulatory constraints, and technical realities. This decision tree provides a high-level framework for navigating this strategic choice.

The key insight is that the infrastructure choice is a direct reflection of business strategy—whether optimizing for predictable workloads with maximum control or pursuing rapid market entry with maximum agility.

Total Cost of Ownership (TCO)

The most immediate difference is financial. On-premise is dominated by upfront Capital Expenditure (CapEx)—the purchase of servers, storage, networking hardware, and software licenses, plus the costs of physical space, power, and cooling.

Cloud operates on an Operational Expenditure (OpEx) model. You pay a recurring fee based on consumption, avoiding large initial investments. This pay-as-you-go approach provides crucial financial flexibility, particularly for startups and SMBs seeking to preserve capital.

However, a simple CapEx vs. OpEx comparison is superficial. A robust TCO analysis must account for hidden costs. For on-premise, these include:

- Staffing Costs: Salaries for the IT team required to manage, maintain, and secure the physical infrastructure.

- Maintenance Contracts: Ongoing fees for hardware support and software updates.

- Upgrade Cycles: The recurring CapEx needed to replace aging hardware every few years.

Cloud TCO has its own complexities:

- Data Egress Fees: Charges for transferring data out of the cloud can be significant, especially for data-intensive applications.

- Resource Sprawl: The ease of provisioning can lead to underutilized or forgotten resources, causing unforeseen cost increases.

- Managed Service Premiums: The convenience of PaaS offerings comes at a cost premium over self-managing the same services on basic virtual machines.

The industry trend is clear: public cloud spending is projected to grow from under 17% of enterprise IT spending in 2021 to over 45% by 2026. This reflects a broad recognition of the cloud’s operational advantages. For SMBs, 63% of workloads and 62% of data are already in the cloud, freeing up IT teams to focus on product engineering rather than infrastructure maintenance. You can find more on these cloud adoption trends from Spacelift.

Security and Compliance

Security is not an inherent feature of either model; it is a function of implementation and governance. On-premise infrastructure provides the potential for absolute control. Your team owns the entire security stack, from physical data centre access to firewall configurations and OS hardening.

This total control translates to total responsibility. The burden of securing the infrastructure and meeting compliance mandates like GDPR, DORA, or HIPAA rests entirely on your internal team. While this enables a highly customized security posture, it requires deep in-house expertise and continuous vigilance.

The core security trade-off is between the absolute control of an on-premise environment and the specialized, at-scale security infrastructure of a major cloud provider.

Cloud security operates on a shared responsibility model. The provider is responsible for the security of the cloud (physical data centres, hardware, core networking). You are responsible for security in the cloud (application configuration, access management, data encryption, network rules). A misconfigured cloud environment can be significantly more exposed than a well-architected on-premise one.

Performance and Reliability

For certain applications, physical proximity is non-negotiable. On-premise excels in scenarios demanding ultra-low latency, such as high-frequency trading, industrial automation, or real-time manufacturing systems. You can architect the network and hardware for optimal performance without the constraints of a cloud provider’s regional data centre topology.

Conversely, cloud providers offer globally distributed networks with built-in redundancy. They deliver high reliability and uptime through features like availability zones and automated failover—capabilities that are prohibitively expensive and complex to replicate on-premise. The key is to design applications to leverage these distributed features correctly.

Scalability and Elasticity

Scalability is a primary driver of cloud adoption. The ability to provision and de-provision resources on demand—elasticity—is essential for applications with unpredictable traffic, such as an e-commerce platform during a sales event or a rapidly growing SaaS product.

Scaling on-premise is a slow, capital-intensive process. It involves procuring, installing, and configuring new hardware, which can take weeks or months. This makes it ill-suited for handling sudden demand spikes and often leads to over-provisioning—purchasing excess capacity “just in case,” which results in wasted capital.

Maintenance and Operations

With an on-premise architecture, your IT team is responsible for the entire operational lifecycle: hardware repairs, firmware updates, OS patching, and physical environment management. This operational overhead consumes engineering resources that could be dedicated to building new features.

In the cloud, the provider manages this “undifferentiated heavy lifting.” Your team is liberated from physical hardware management, shifting its focus to higher-value activities like Infrastructure-as-Code (IaC), automated deployments, and performance optimization, fostering a more agile DevOps culture.

Cloud vs. On-Premise Strategic Decision Matrix

This table summarizes the core differences across key business and technical dimensions. Use it to map your company’s critical priorities to the most suitable infrastructure model.

| Dimension | Cloud Computing (As-a-Service) | On-Premise (Self-Hosted) |

|---|---|---|

| Cost Model | OpEx (Operational Expenditure): Pay-as-you-go, subscription-based. | CapEx (Capital Expenditure): Large upfront investment in hardware/software. |

| Scalability | High Elasticity: Scale resources up or down on demand in minutes. | Limited & Slow: Requires manual hardware procurement and setup. |

| Control | Shared Control: You control the OS/app; provider controls the hardware. | Total Control: You own and manage the entire infrastructure stack. |

| Security | Shared Responsibility Model: Provider secures the cloud; you secure what’s in it. | Total Responsibility: You are liable for all security, physical and digital. |

| Maintenance | Provider-Managed: All hardware and underlying infrastructure maintenance is handled. | Self-Managed: Your team is responsible for all maintenance, repairs, and updates. |

| Performance | Globally Distributed: High reliability but latency depends on architecture/region. | Location-Specific: Potentially lower latency for local users/systems. |

| Ideal Use Case | Startups, variable workloads, fast-growing applications, DevOps environments. | Highly regulated industries, predictable workloads, ultra-low latency needs. |

This matrix highlights the fundamental trade-off: cloud offers agility and reduces operational burden at the cost of some control, while on-premise provides maximum control and performance customization at the cost of higher upfront investment and management overhead.

When to Choose an On-Premise Architecture

Despite the prevalence of cloud-first strategies, choosing an on-premise architecture is often a deliberate, pragmatic decision. For certain high-stakes scenarios, it remains the most secure and reliable option. This choice is driven by non-negotiable requirements that the public cloud’s shared infrastructure model cannot adequately address, particularly concerning performance, security, and data residency.

Scenarios Demanding Ultra-Low Latency

Physical proximity is a performance variable that cannot be abstracted away. For systems where millisecond-level latency determines success or failure, the distributed nature of the cloud introduces unacceptable delays.

Consider these use cases:

- High-Frequency Trading (HFT): Financial firms require servers to be physically co-located in the same data centres as stock exchanges. A few milliseconds of network latency can result in significant financial losses.

- Industrial IoT and Manufacturing: Automated robotics and real-time process controls on a factory floor require instantaneous feedback loops. An internet outage or network latency could halt production entirely.

- Real-Time Medical Imaging: In a surgical setting, robotic assistance or live diagnostic streams demand zero lag. Predictable, instantaneous system response is a matter of patient safety, which on-premise hardware ensures.

Navigating Stringent Data Sovereignty and Compliance

For businesses in regulated industries, data governance is a foundational requirement. Maintaining sensitive data within a physically controlled, auditable location is often the most straightforward way to comply with mandates like GDPR, DORA, or HIPAA.

When compliance requires absolute certainty over data locality and physical access, an on-premise architecture eliminates the ambiguities of the cloud’s shared responsibility model. You’re trading convenience for certainty.

This is particularly critical for government agencies, defence contractors, and financial institutions. Demonstrating compliance becomes a matter of showing control over your own server racks, access logs, and network perimeters, rather than relying on a third-party provider’s attestations.

Managing Integrated Legacy Systems and Predictable Costs

Many established enterprises rely on deeply integrated legacy systems that form the backbone of their operations. A full-scale cloud migration for these systems is often a high-risk, multi-year project with an uncertain ROI.

In these cases, maintaining the on-premise environment is the pragmatic approach. The known stability and performance of the existing setup outweigh the potential benefits of a risky migration. This strategy is explored further in our guide to Kaizen projects for maintaining existing systems.

On-premise can also offer a lower Total Cost of Ownership (TCO) for workloads with predictable, high-volume resource demands. If an application runs at a steady, intensive pace 24/7, a one-time capital expenditure (CapEx) on hardware can be more cost-effective over the long term than paying perpetual operational expenses (OpEx) to a cloud provider. This model also provides protection from unpredictable costs like data egress fees, which can escalate quickly with data-heavy analytics or backup operations.

When to Choose a Cloud Architecture

If speed, agility, and market responsiveness are primary business drivers, a cloud architecture is almost always the correct choice. While on-premise excels in scenarios requiring absolute control, the cloud has become the default for modern software development where adaptability is a key competitive advantage.

The decision to build cloud-native is a strategic one, aimed at accelerating innovation by removing the friction of managing physical infrastructure.

The pay-as-you-go financial model transforms what would be a significant upfront capital expense (CapEx) into a predictable operational expense (OpEx). This allows a lean team to test a business idea, pivot based on user feedback, and scale resources precisely as needed, without investing heavily in hardware that may become underutilized or obsolete.

Architecting for Unpredictable Demand

The cloud’s elasticity—the ability to automatically scale resources in response to real-time demand—is a critical architectural enabler. It is essential for any application with variable workloads, where an on-premise solution would force a choice between wasting capital on idle capacity or risking service failure under load.

Consider these scenarios:

- SaaS Product Growth: As a new SaaS platform gains traction, compute and database resources must scale seamlessly to maintain a positive user experience. The cloud enables automated policies to add or remove servers based on metrics like CPU utilization or request latency.

- E-commerce Traffic Spikes: An online retailer preparing for a Black Friday sale can provision massive server capacity for just a few days or hours and then scale back down. This “bursting” capability is not financially or operationally feasible with on-premise hardware.

- Data Processing Workloads: A business intelligence team can spin up a powerful compute cluster for a few hours each day to run heavy analytics jobs and then shut it down, paying only for the time it was active.

For any system where user demand is not a flat, predictable line, the cloud’s elastic scalability is a core architectural enabler. It shifts the problem from “How much hardware should we buy?” to “What are the rules for our auto-scaling group?”

Accelerating Development with Managed Services

The true value of a cloud architecture extends beyond IaaS to managed platform services (PaaS). Offerings like serverless functions (AWS Lambda) or managed databases (Azure SQL) abstract away significant operational complexity behind a simple API. This allows engineering teams to focus on building features that deliver customer value.

Instead of spending weeks configuring, securing, and patching a database cluster, a team can provision a production-ready, highly available database in minutes. This dramatically reduces the time-to-market for new products and features.

By offloading undifferentiated heavy lifting to the cloud provider, engineering talent is freed to solve core business problems. This operational efficiency creates a powerful competitive advantage, enabling faster iteration and greater market responsiveness. The trade-off is a degree of vendor lock-in, but for most organizations, the gain in development velocity is a worthwhile exchange.

Implementing a Pragmatic Hybrid Cloud Strategy

For many established organizations, the cloud vs. on-premise debate is not a binary choice. A hybrid model, which blends the stability of existing on-premise systems with the agility of the cloud, is often the most pragmatic path forward. This approach allows for modernization without the risk and expense of a complete overhaul.

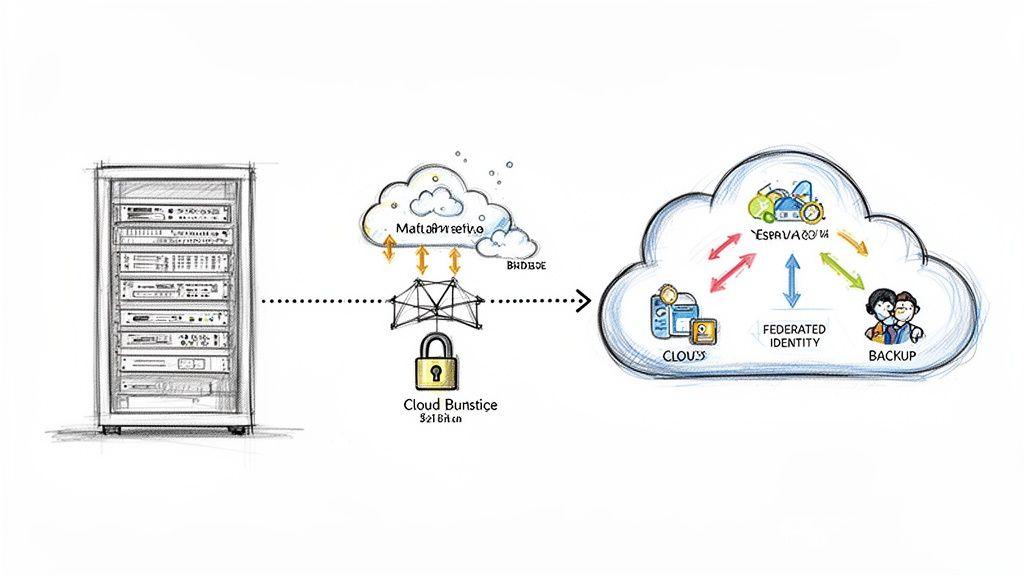

A hybrid architecture is a deliberate, long-term strategy. It enables a business to retain sensitive data or core legacy applications securely on-premise while leveraging the public cloud for its distinct advantages in scalability, development agility, and disaster recovery.

This model unlocks practical architectural patterns that solve specific, real-world business problems.

Common Hybrid Cloud Patterns

A hybrid strategy enables targeted, high-impact improvements through several well-established patterns, each addressing a distinct operational challenge.

- Cloud Bursting: The public cloud serves as an overflow for on-premise infrastructure. When traffic surges, workloads automatically extend to cloud resources. This provides elasticity to handle peak demand without investing in on-premise hardware that remains idle most of the time.

- Disaster Recovery (DR): Building and maintaining a secondary on-premise DR site is often prohibitively expensive. A hybrid model offers a cost-effective alternative where the cloud serves as the failover environment. Critical data and applications are replicated to the cloud, ready for activation if the primary site fails.

- Tiered Application Architecture: New, cloud-native applications are developed in the public cloud while integrating securely with core on-premise systems of record, such as a customer database or ERP. This allows for rapid innovation at the user-facing layer without disrupting mission-critical backend systems. Executing this requires robust automation, making a well-defined CI/CD pipeline essential for managing deployments across both environments.

Implementation Complexities and Considerations

A naive approach to hybrid cloud introduces significant risk. Successfully bridging two distinct environments requires careful architectural planning to manage complexity and ensure consistent governance.

A hybrid cloud is not simply having both on-premise and cloud resources; it is about creating a cohesive, secure, and manageable fabric that spans both. The real work lies in the integration, not the procurement.

Key challenges to address include:

- Secure Network Connectivity: A reliable, secure link is non-negotiable. Options like a dedicated private connection (e.g., AWS Direct Connect or Azure ExpressRoute) or a site-to-site VPN are necessary to ensure data can move between environments without traversing the public internet.

- Federated Identity Management: A unified identity and access management (IAM) system is critical. Users should have a single identity that grants appropriate permissions across both on-premise and cloud resources, preventing security gaps and simplifying administration.

- Consistent Security Policies: Security policies, monitoring, and threat detection must be applied consistently across both environments. A Security Information and Event Management (SIEM) system capable of ingesting logs from all resources is essential for maintaining a unified security posture.

How Your Infrastructure Choice Impacts AI Workloads

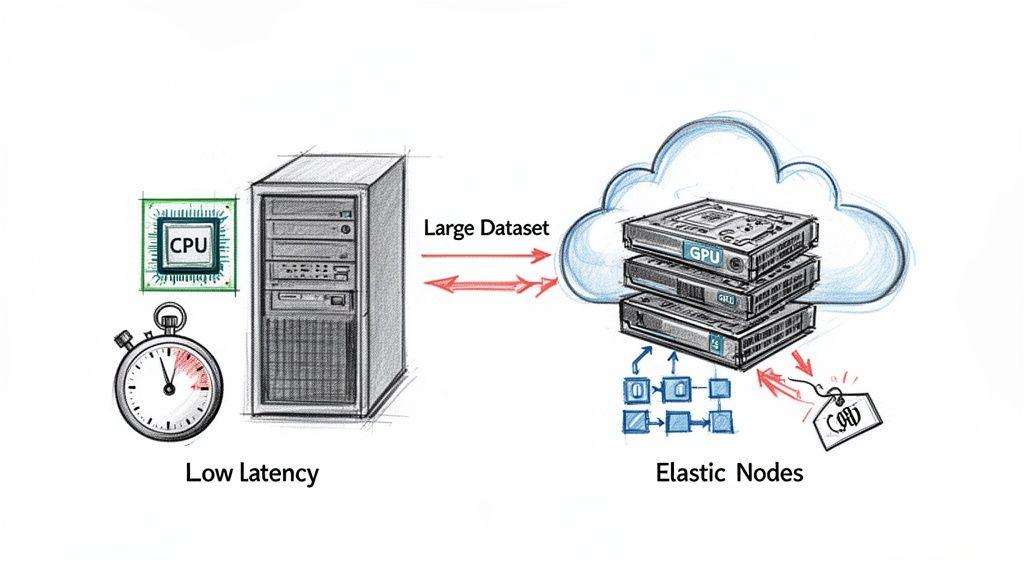

The infrastructure decision for AI workloads directly impacts access to specialized hardware, development velocity, and the economic viability of your AI strategy. The primary challenge for most organizations is procuring and managing the necessary hardware.

An on-premise approach to AI development immediately encounters a significant capital expenditure barrier. Specialized hardware, particularly the powerful GPUs required for model training, is expensive to purchase and complex to maintain. This model forces a high-stakes prediction of future needs, risking either over-investment in rapidly depreciating assets or under-provisioning that stifles innovation.

Cloud as an AI Accelerator

Cloud platforms directly address the hardware procurement problem by offering on-demand access to a vast, constantly updated inventory of GPUs, TPUs, and other AI accelerators. This transforms a large CapEx challenge into a manageable OpEx model, allowing teams to experiment with different hardware configurations and scale resources based on the specific requirements of each training or inference job.

The killer architectural advantage of the cloud for AI is that it decoupples experimentation from procurement. Your team can fire up state-of-the-art hardware for a few hours, not a few years, which radically lowers the barrier to entry for building serious models.

Major cloud providers also offer managed AI platforms that abstract away much of the underlying infrastructure complexity. These services provide pre-configured environments and tools covering the entire machine learning lifecycle—from data preparation and training to deployment and monitoring. This allows engineers to focus on model improvement rather than server management.

Crucial Trade-offs and On-Premise Strengths

However, the cloud is not a universal solution for all AI workloads. The on-demand convenience comes at a cost. High-performance cloud instances are expensive, and intensive training cycles can lead to escalating costs. Furthermore, data egress fees can become a significant, often overlooked, expense when working with large datasets. Transferring terabytes of data out of the cloud can result in substantial and unexpected bills.

This is where an on-premise setup retains strategic value for specific AI use cases:

- Sensitive Data Governance: When training models on highly sensitive data subject to strict regulations like GDPR, maintaining both data and models within a physically secure, auditable on-premise environment is the most direct path to compliance. It minimizes data residency risks and simplifies audits. Our AI Risk & Privacy Checklist provides a structured framework for this evaluation.

- Edge Inference and Low Latency: For AI applications where millisecond-level response time is critical—such as industrial robotics or real-time video analysis—deploying inference models on-premise, adjacent to the data source, is essential. It guarantees the ultra-low latency that a round-trip to a remote cloud data centre cannot provide. In these scenarios, the comparison of cloud computing vs on-premise is heavily skewed toward local processing.

Frequently Asked Questions

Which Is More Secure: Cloud Or On-Premise?

Neither model is inherently more secure; security depends entirely on implementation and governance.

On-premise provides absolute control, which also means absolute responsibility for all security layers—from physical data centre access and network hardware to software patching and intrusion detection.

Cloud providers manage world-class infrastructure security, but security operates on a shared responsibility model. You remain responsible for correctly configuring applications, access controls, and data security. A misconfigured cloud environment can be far less secure than a well-managed on-premise one.

Can I Migrate From On-Premise To The Cloud Later?

Yes, migrating from on-premise to the cloud is a common strategy, but it is rarely a simple “lift-and-shift” operation. It is a significant transformation that requires meticulous planning.

A successful migration involves a thorough application assessment, potential refactoring or re-architecting, a detailed data transfer plan, and rigorous security and compliance checks. For many organizations, a hybrid model serves as a practical, less disruptive intermediate step.

How Do Costs Truly Compare Over A Five-Year Period?

A five-year cost analysis is complex and extends beyond a simple OpEx vs. CapEx comparison. On-premise requires a large upfront CapEx followed by ongoing OpEx for power, cooling, hardware refreshes, and specialized staff.

Cloud eliminates the initial CapEx but introduces a variable OpEx that scales with usage. For businesses with highly predictable and stable workloads, on-premise can achieve a lower total cost of ownership (TCO).

However, this calculation must also account for intangible costs, such as the opportunity cost of slower innovation and the significant engineering overhead required to maintain and operate the infrastructure yourself.

Ready to build a reliable, scalable system without the guesswork? Devisia specialises in pragmatic architecture and custom software development that delivers measurable business value. Let’s build your next digital product together.