A code of conduct is more than a legal or HR document. For a technology company, it must serve as an operational blueprint for how software and AI systems are designed, built, and maintained. It translates abstract ethical principles into concrete architectural and engineering directives.

Why a Generic Code of Conduct is Inadequate for Tech Companies

In many organizations, the code of conduct is drafted by legal and HR departments. It focuses on workplace etiquette and general business principles. While necessary, this approach is insufficient for companies building scalable SaaS platforms, integrating AI features, and managing complex data pipelines.

The most significant risks in modern digital products—from data breaches to algorithmic bias—do not originate from interpersonal conflicts but from technical and architectural decisions. A generic code of conduct fails to address the root cause of these risks.

A tech-specific code of conduct bridges this gap. It provides an operational framework for engineering and product teams, translating abstract values into tangible technical guardrails. Without one, critical ethical decisions are left to individual interpretation, exposing the business to significant operational and reputational risks.

The Problem with Generic Templates

Generic templates offer no guidance on the critical questions engineers face daily. A standard code might state, “respect customer privacy,” but a tech-specific code mandates precise actions and forces teams to confront specific trade-offs.

Consider these engineering-level questions:

- Data Minimization: Does the database schema for a new feature collect only the personal data strictly necessary for its function, or does it capture everything “just in case”?

- Algorithmic Fairness: How do we test a new recommendation engine to verify it doesn’t introduce unintentional bias against specific user segments before deployment?

- Security by Default: Are vulnerability scanning and dependency checks an automated, mandatory part of the CI/CD pipeline, or are they an ad-hoc task performed “if time allows”?

- Data Processing Transparency: When an AI model makes a decision impacting a user, can we explain the key factors that influenced the outcome?

These are not HR issues; they are engineering and architectural problems that demand an ethical framework for guidance.

The Business Risks of an Inadequate Code

A superficial code of conduct creates concrete liabilities. Reputational damage from an algorithm perceived as unfair can erode customer trust far faster than marketing can build it. More critically, regulatory scrutiny is intensifying. Non-compliance with regulations like GDPR, NIS2, or emerging AI legislation can result in substantial fines and operational disruptions.

The distinction between a generic document and an architectural guide for tech teams is fundamental. A tech-focused code of conduct doesn’t just state principles; it integrates them directly into the software development lifecycle, turning them into measurable and verifiable engineering tasks.

The following table highlights the key differences.

Standard vs. Tech-Specific code of conduct

| Component | Generic Code of Conduct | Tech & AI-Specific Code of Conduct |

|---|---|---|

| Primary Focus | Workplace behavior, general business ethics. | Design, security, privacy, and fairness principles in software. |

| Audience | All employees, from an HR/legal perspective. | Engineers, product managers, data scientists, software architects. |

| Guideline Examples | ”Do not discriminate,” “Act with integrity." | "Implement privacy by design,” “Monitor AI models for performance drift.” |

| Risk Mitigated | People-related legal and reputational risks. | Operational, compliance, and product risks (e.g., bias, data breaches). |

| Practical Application | HR policies, annual training modules. | Architecture reviews, code review checklists, pre-release gates. |

A tech-specific code shifts the focus from interpersonal behaviors to the technical decisions that have a large-scale impact on users and the business.

In Italy, the need for robust ethical frameworks was accelerated by Legislative Decree 231/2001, which introduced corporate liability for specific criminal offenses. This pushed companies to adopt formal organizational models to mitigate legal and financial risks. A study on Italian corporate codes found that 60% were hybrid models, combining legal compliance with value-based commitments, demonstrating a trend toward embedding a genuine ethical culture rather than mere formal compliance. Approfondisci i risultati dello studio sui codici aziendali in Italia.

A well-architected code of conduct is not a legal burden but a strategic asset. It enforces privacy by design, reduces technical and compliance debt, and serves as a public commitment to responsible innovation—a compelling business case for founders and CTOs.

Ultimately, treating the code of conduct as a technical blueprint helps build more robust, secure, and trustworthy products, which is the foundation for long-term success.

Defining Actionable Principles for Development and AI Teams

An effective code of conduct for a tech company must move beyond high-level statements like “act with integrity.” Its power lies in translating broad ethics into concrete, measurable principles that engineering and AI teams can implement, debate, and verify. Without this step, the code remains disconnected from the daily technical trade-offs that shape your products.

The objective is to establish foundational, non-negotiable commitments that guide everything from architectural decisions to AI model development. These principles become the shared language for the technical organization, ensuring every engineer, data scientist, and product manager aligns on what responsible software development entails.

These principles should not be handed down as mandates. The most effective approach is for CTOs to lead workshops where teams co-create them, ensuring relevance to real-world challenges. When teams participate in building the code, they own it, making it a living part of the engineering culture rather than another document to be ignored.

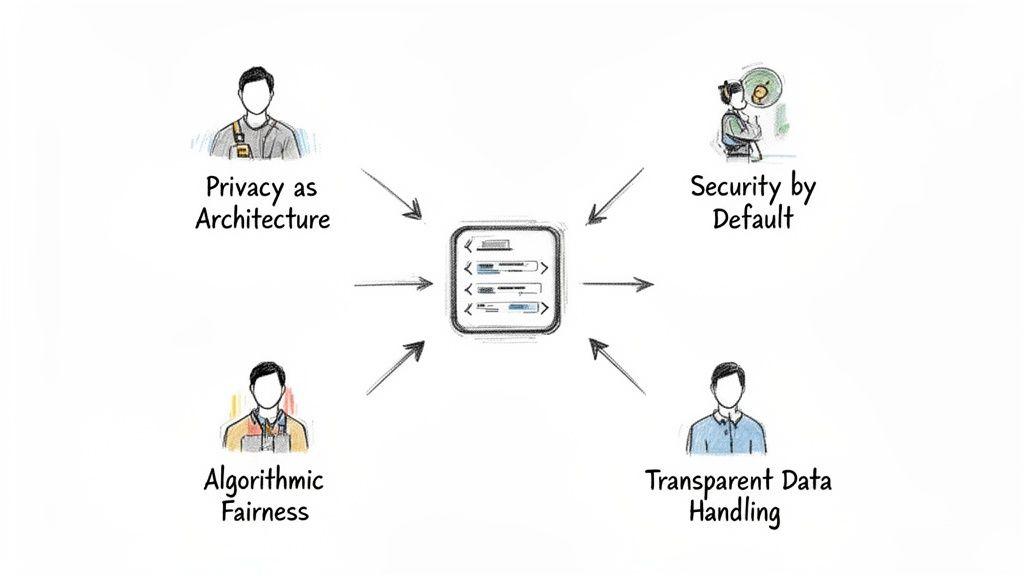

From Abstract Values to Engineering Commitments

Let’s break down four core principles into actionable language that teams can implement. Each one deliberately shifts the focus from a vague ideal to a specific, technical commitment.

Privacy as an Architectural Choice

This principle reframes privacy not as a feature or a compliance checkbox, but as a fundamental architectural constraint, as critical as scalability or performance. It acknowledges that the most effective privacy protections are designed from the start, not added as an afterthought.

- Sample Language: “We treat user privacy as a core system requirement. Every new feature, service, and data schema will be designed to minimize data collection to what is strictly necessary for its defined purpose (data minimisation). We will prefer architectures that process data locally or in a decentralized manner wherever feasible.”

- Technical Impact: This language forces teams to justify every data point collected and directly influences database design. It promotes the evaluation of technologies like federated learning or on-device processing over defaulting to centralized data lakes.

Security by Default

This principle establishes security as the baseline, not an add-on. It shifts the burden from individual engineers remembering to be secure to building systems where the default state is the secure state.

- Sample Language: “Our systems will be secure by default. Access controls are deny-all unless explicitly granted, all data in transit and at rest is encrypted, and automated security scanning is a mandatory, non-skippable step in our CI/CD pipelines. We commit to a culture of proactive vulnerability management.”

- Technical Impact: This translates directly into implementing strict IAM policies, automating dependency scanning with tools like Snyk or Dependabot, and ensuring the most secure coding libraries are the easiest for developers to use.

A principle is only as strong as its implementation. Stating “we value security” is meaningless. Mandating that a pull request cannot be merged if automated security scans fail is a principle with tangible impact.

Algorithmic Fairness and Bias Mitigation

For any company building AI systems, this is non-negotiable. It moves beyond the impossible goal of creating perfectly “unbiased” models to establishing a transparent, repeatable process for identifying, measuring, and mitigating harmful biases.

- Sample Language: “We commit to building AI systems that are as fair and equitable as possible. Before deployment, all models impacting users will undergo a documented bias assessment against predefined fairness metrics relevant to the use case. We will document data sources, limitations, and mitigation strategies, and establish monitoring for performance degradation and fairness drift post-deployment.”

- Technical Impact: This requires teams to adopt specific tools and workflows. They must use frameworks to analyze datasets for representation gaps, define clear fairness metrics (e.g., demographic parity, equal opportunity), and build model monitoring dashboards that track fairness alongside accuracy. To help structure these assessments, teams can start with our free AI Risk & Privacy Checklist.

Transparent Data Handling

This principle is about building trust by ensuring that how your system uses data is understandable—not just to auditors, but to the teams building it and, where relevant, to end-users.

- Sample Language: “We will maintain clear and comprehensive documentation of our data pipelines, including data sources, transformations, and access controls. For AI systems, we will prioritize model explainability techniques to understand and, where possible, articulate the key drivers of their decisions. Users will be given clear information about how their data is used to power our services.”

- Technical Impact: In practice, this means investing in data governance tools, maintaining data lineage records, and integrating explainability libraries (like SHAP) directly into the model validation process. It transforms “data transparency” from a buzzword into a set of documented, reviewable engineering artifacts.

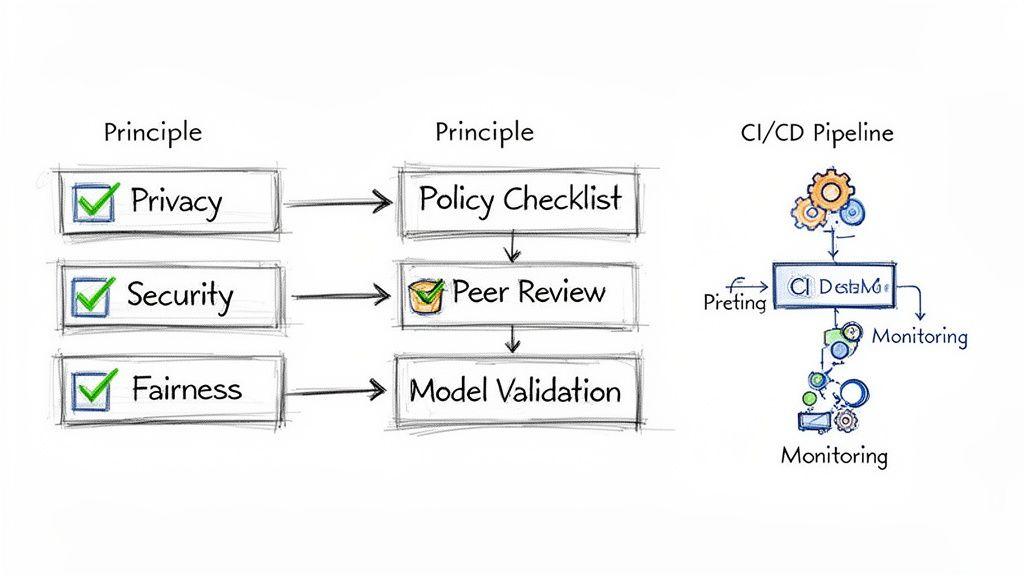

Translating Principles Into Engineering Policies

A principle without a policy is merely a suggestion. The real work lies in bridging the gap between high-level tenets like “Privacy as an Architectural Choice” and the day-to-day software development lifecycle. This involves embedding your code of conduct directly into the tools and workflows your engineering teams already use.

The goal is not to add bureaucracy but to integrate ethical guardrails so seamlessly that they become a natural part of the engineering process. When implemented correctly, doing things the “right way” becomes the path of least resistance.

This integration is where a code of conduct becomes an active engineering asset that helps manage risk before a single line of code is deployed.

From Principle to Pull Request

The most effective policies meet developers where they work. Instead of creating separate, out-of-band review processes, modify existing ones. The pull request (or merge request) is the ideal place to start.

For a principle like “Security by Default,” you can modify your pull request template to include a mandatory checklist:

- Secure Coding Standards: Is all user input validated and sanitized to prevent injection attacks?

- Dependency Scans: Has a vulnerability scan run on all new third-party libraries? Are there zero critical vulnerabilities?

- Secret Management: Have all hardcoded secrets (API keys, passwords) been removed and replaced with references to a secure vault?

By making this checklist a requirement for merging code, you transform a high-level security principle into a non-negotiable engineering task. This provides immediate, context-specific feedback within the developer’s workflow.

AI Model Validation Protocols

For AI teams, policies must address the unique risks of model development. A principle like “Algorithmic Fairness” requires a rigorous validation protocol that every model must pass before deployment.

This protocol should be a formal, documented process that includes:

- Data Source Auditing: Document the origin, composition, and known limitations of the training data. Assess whether the data is representative of the target user population.

- Bias Metric Testing: Define and measure specific fairness metrics (e.g., demographic parity, equalized odds) relevant to the model’s use case. If a model fails to meet a predefined threshold, it does not proceed.

- Explainability Reports: Generate and archive a model explainability report (using tools like SHAP) that shows which features are driving its predictions.

- Human-in-the-Loop Triggers: Define the conditions under which a model’s output must be escalated for human review, such as low confidence scores or outlier predictions.

This protocol acts as a gatekeeper, ensuring no model is deployed without undergoing a thorough, repeatable ethical and technical review.

A policy is not about punishment; it is about creating guardrails. The goal is to make it organizationally difficult to ship a product that violates your core principles. It is an architectural choice for your entire development process.

A Real-World Scenario: AI Feature Development

Consider how a code of conduct guides a team building an AI-powered feature to classify customer support tickets.

The team begins with data sourcing. The “Transparent Data Handling” principle requires them to first filter and anonymize any personally identifiable information (PII) not strictly necessary for the model, rather than scraping all historical tickets.

During model training, the “Algorithmic Fairness” policy requires the team to run tests ensuring the model performs equally well across different customer segments, preventing scenarios where non-native English speakers receive poorer classification accuracy.

Before deployment, the pull request checklist enforces the “Security by Default” principle, requiring a peer review to confirm the model’s API endpoint has proper authentication and is protected against denial-of-service attacks. This methodical, policy-driven approach turns abstract ethical goals into concrete engineering outcomes.

The growing emphasis on Corporate Social Responsibility (CSR) in Italy reflects this trend, with a reported 96% of businesses focusing on it by 2021. This movement is closely linked to adopting codes of conduct that address key issues like transparency and anti-corruption, as seen in frameworks mandated under Legislative Decree 231/2001. These codes require strict compliance, monitoring, and training to embed ethical practices into daily operations. You can learn more about business etiquette in Italy and its connection to these ethical codes.

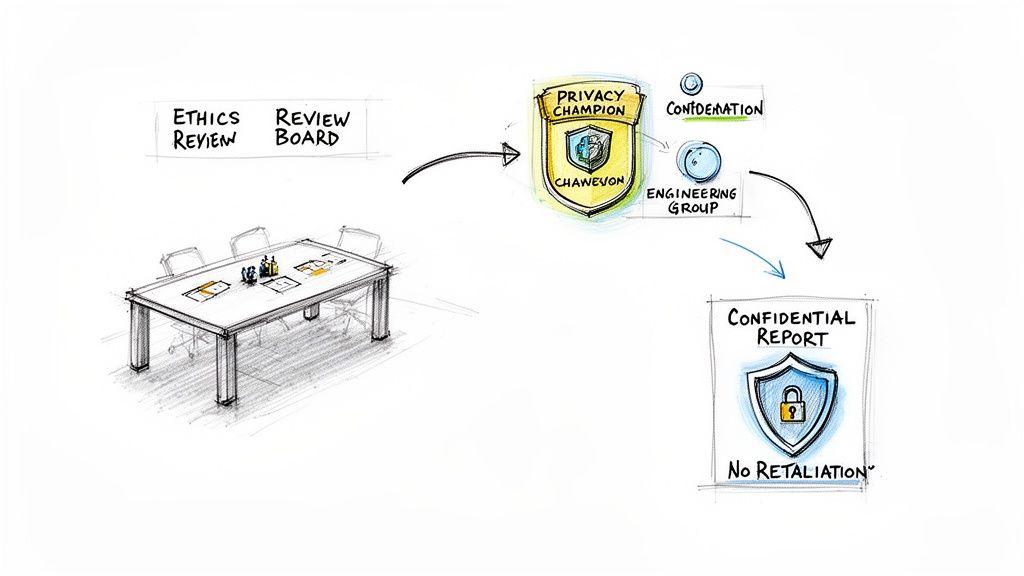

Establishing Clear Governance and Reporting Mechanisms

A code of conduct without clear ownership is an unenforced document. To have a real impact, it requires a well-defined governance structure and a trusted reporting process. The goal is not to create bureaucracy but to establish lightweight, practical mechanisms that encourage accountability and open communication.

Without this structure, the code remains a statement of intent. When ethical dilemmas arise—such as a conflict between a business goal and a privacy principle—teams need a clear path for guidance and a safe channel to report concerns without fear of reprisal. A vague or non-existent process guarantees the code will be ignored when it is needed most.

Defining Roles and Responsibilities

Effective governance starts with clear responsibilities. Avoid creating a slow, formal committee that becomes a bottleneck. Instead, aim for agile, cross-functional roles that integrate into your existing organizational structure.

Here are two practical models:

- The Privacy Champion: Appoint a dedicated individual within the engineering or product team. This person serves as the first point of contact for questions about the code, helps guide new projects, and advocates for privacy by design during technical reviews.

- A Lightweight Ethics Review Board: Form a small, cross-functional group with representatives from engineering, product, legal, and leadership. This board does not approve routine tasks but convenes to review high-risk projects, resolve major ethical conflicts, and oversee updates to the code itself.

Distributing ownership is key. When engineers see their peers involved in governance, it reinforces that the code is a shared technical responsibility, not a top-down mandate from compliance.

Designing a Safe and Transparent Reporting Process

A reporting channel is only effective if it is trusted. Team members must feel psychologically safe to raise concerns, confident that their report will be taken seriously and that there will be no retaliation. A superficial process is worse than none at all; it breeds cynicism and forces problems underground.

The process should be built on three pillars:

- Confidential and Accessible Channels: Offer multiple, clearly communicated methods for reporting issues, such as a dedicated email alias, a simple form on the company intranet, or direct contact with a designated Privacy Champion or HR partner. Confidentiality is the absolute priority.

- A Transparent Investigation Procedure: Outline the exact steps that follow a report. The procedure should define who investigates the concern, the expected timeline for a response, and how findings will be documented and communicated to the individual who raised the issue.

- Absolute Protection Against Retaliation: Implement an explicit, zero-tolerance policy. State clearly that any form of retaliation against someone for reporting a concern in good faith will result in immediate disciplinary action. This commitment must be visibly supported by leadership to build genuine trust.

Governance is not about control; it is about creating clarity and support. The purpose of these mechanisms is to empower teams to make the right decisions proactively and to provide a safety net for complex ethical challenges.

The structure you choose should reflect your company’s size and culture. Whether you opt for a single champion or a small board, the goal is the same: to make your code of conduct a living document supported by a trusted human process. For organizations needing guidance, specialized services can provide a clear path to establishing robust governance.

Integrating Your Code with GDPR and NIS2 Compliance

For a modern tech company, a code of conduct is a critical compliance asset. When structured correctly, it becomes a central pillar for meeting regulations like GDPR, NIS2, and the EU AI Act. It provides the mechanism to translate abstract legal requirements into concrete engineering principles, streamlining audits and demonstrating a commitment to compliance.

By weaving compliance into your code of conduct, you shift it from a reactive, separate task to a proactive, architectural concern. This demonstrates that your organization builds compliance into its products and culture, rather than merely documenting it.

Mapping code of conduct Principles to GDPR

The General Data Protection Regulation (GDPR) is built on principles that should be reflected in your system architecture. A well-crafted code of conduct serves as the bridge between legal text and the practical realities of your development teams.

Consider these direct translations from legal requirements to engineering policies:

- Purpose Limitation: Your code can mandate that every new feature must have a clearly documented, legitimate purpose for processing personal data, directly implementing GDPR Article 5(1)(b) and preventing “just-in-case” data collection.

- Data Minimisation: A clause requiring systems to collect only the absolute minimum data needed for a specific function directly implements Article 5(1)(c). Data minimization becomes a non-negotiable requirement in design reviews.

- Storage Limitation: Your code can establish clear data retention policies, requiring automated deletion or anonymization of data once its purpose is fulfilled, addressing Article 5(1)(e).

When these GDPR tenets are embedded in your code, they become part of the daily engineering conversation. During a code review, a developer can ask, “Does this feature align with our principle of data minimization?” This transforms compliance from an abstract burden into a shared engineering responsibility. For a deeper look, our guide explains how to embed privacy by design into your architecture.

A strong code of conduct acts as a translation layer. It converts dense legal articles from regulations like GDPR into actionable rules that engineers can understand, implement, and verify within their existing workflows.

Aligning with NIS2 Security Mandates

The NIS2 Directive raises the bar for cybersecurity, particularly regarding supply chain security and incident response. Your **code of conduct **is the ideal place to codify your organization’s commitment to these higher security standards, translating regulatory demands into expected behaviors and technical controls.

Address NIS2 requirements with specific clauses:

- Incident Response Protocols: The code should mandate that all teams follow a predefined incident response plan. It can require engineers to participate in regular security drills to ensure they understand their role in reporting and mitigating security events, a core NIS2 requirement.

- Supply Chain Risk Management: NIS2 emphasizes managing risks from third-party suppliers and dependencies. Your code can include a policy requiring rigorous security vetting for all new software libraries and vendors, effectively prohibiting the use of unvetted open-source packages in production.

- Secure Development Practices: The code can enforce secure-by-default principles, making practices like automated vulnerability scanning and secrets management mandatory parts of the CI/CD pipeline. This provides a clear, auditable trail proving proactive steps to secure the software supply chain.

By linking your code directly to these directives, you create a clear line of sight from a regulatory obligation to day-to-day operational reality. This strengthens your security posture and provides solid evidence for auditors that your compliance efforts are systematic and integrated into how you work.

Maintaining and Evolving Your Code of Conduct

A **code of conduct **is not a static document. To remain a practical guide for product and engineering teams, it must be treated as a living artifact. Technology, regulations, and business objectives change, and the code must evolve alongside them to remain effective.

Without a defined lifecycle, even the best-written code will become obsolete. It will degrade from a framework for responsible innovation into a source of irrelevant bureaucracy. This is a significant risk: teams will ignore an outdated document, leaving critical ethical gaps in the development process.

Establishing a Regular Review Cycle

The cadence of reviews depends on your company’s pace, but an annual review is a good starting point. However, specific events should trigger an immediate re-evaluation, regardless of the schedule.

Consider a review essential after:

- A strategic product shift or entry into a new market.

- The introduction of a disruptive technology into your stack, such as large language models or generative AI.

- Significant changes in relevant regulations, like the EU AI Act.

- Feedback from an internal investigation that exposed a gap in the current code.

This proactive approach ensures your code of conduct remains aligned with the real-world challenges your teams face.

An effective code of conduct is not just reviewed; it is stress-tested against recent, difficult decisions. Ask your team leads: “In the last six months, did the code provide clear guidance on our most complex technical trade-offs? If not, where did it fall short?”

The review process should be transparent and inclusive, providing a clear channel for anyone on the team to propose changes. Continuous training is equally critical. Regular workshops based on real-world scenarios keep the code’s principles top-of-mind, embedding them in the company culture and ensuring the document remains a practical and trusted tool.

Frequently Asked Questions

When introducing a code of conduct in a dynamic tech environment, founders, CTOs, and compliance managers often have practical questions. Here are answers to common concerns.

How Can a Small Startup Implement This Without a Compliance Team?

For a startup, the key is integration and practicality, not bureaucracy.

Instead of a formal committee, start with a small, cross-functional group: a founder, a lead engineer, and a product manager. Their task is to define core principles tailored to the company’s specific technology and risks.

You can then appoint a “technical ethics lead” as a point of reference. The most effective step, however, is to integrate controls directly into existing workflows. For example, add a “privacy and ethics review” item to pull request templates. This makes the code of conduct a lightweight but persistent part of the culture.

What is the Difference Between a Technical Code of Conduct and HR Policies?

This is a critical distinction. Standard HR policies govern general employee behavior: anti-harassment rules, workplace conduct, and so on.

A technical code of conduct, by contrast, guides the specific ethical and professional decisions made while building a product.

It addresses concrete technical issues such as:

- Data privacy in system architecture.

- Algorithmic bias in AI models.

- Secure coding practices and supply chain management.

While HR policies are a necessary complement, the technical code governs the “how” and “why” of building technology responsibly.

How Should We Handle Conflicts Between Business Goals and the Code?

This is precisely where a well-defined **code of conduct **proves its value.

Instead of relying on ad-hoc decisions, it provides a pre-agreed framework for navigating difficult trade-offs. When a conflict arises, the reporting mechanism should be used to escalate the issue to the designated lead or review team.

Their role is to evaluate the dilemma transparently, based on the code’s principles. This process should be documented to reinforce that ethical standards are not sacrificed for short-term gains and to protect the company’s long-term integrity.

At Devisia, we believe a robust code of conduct is an architectural choice that builds more reliable and trustworthy systems. If you need to translate your business vision into maintainable digital products with integrated ethical principles, we can help. Learn more about our approach at https://www.devisia.pro.